[Avg. reading time: 0 minutes]

[Avg. reading time: 0 minutes]

Disclaimer

[Avg. reading time: 4 minutes]

Required Tools

-

CLI

-

Python (3.11 to 3.13)

-

Python Dependency Manager (choose one)

-

Code Editor

-

VSCode Extension

-

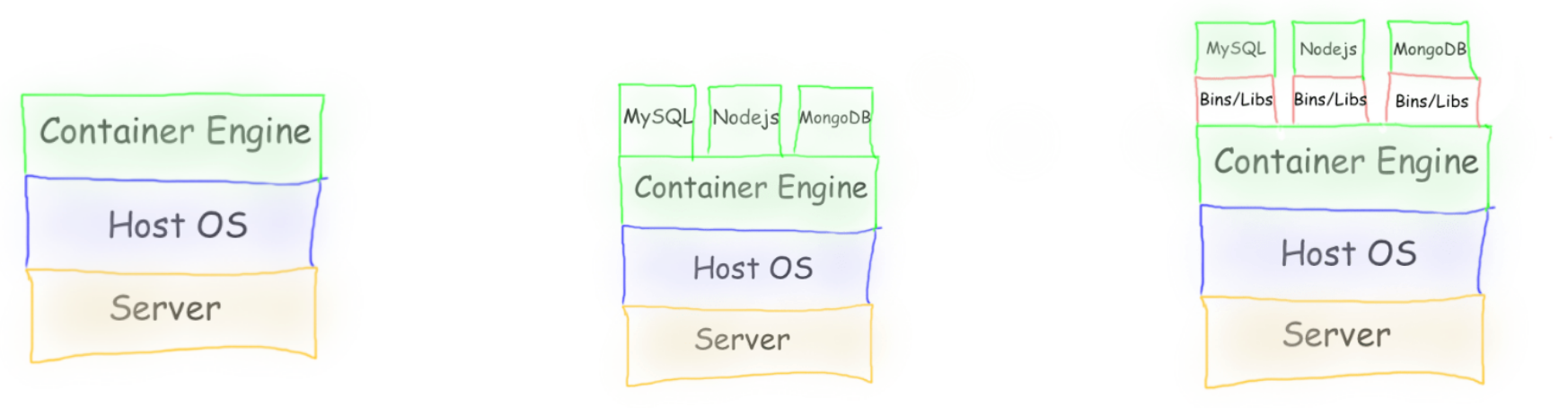

Container Engine

Free Cloud Services

| Tool | Purpose | Link |

|---|---|---|

| Databricks Free Edition | ML & Ops | Free Signup |

| Chroma | Free Vector DB | ChromaDB |

#tools #databricks #python #git

[Avg. reading time: 2 minutes]

MLOps & AI Overview

- MLOps & AI Overview

[Avg. reading time: 3 minutes]

Introduction

AI/ML are no longer just research topics - they drive industry, innovation, and jobs.

GenAI has shifted expectations: businesses want faster solutions with production-grade reliability.

MLOps ensures ideas → working models → deployed systems.

Evolution of the Field

2010s: Big Data + early ML adoption (scikit-learn, Spark MLlib).

2015-2022: Deep learning boom (Neural Networks, NLP with BERT).

2022: Generative AI (GPT, diffusion models).

MLOps is critical for scaling, governance, monitoring.

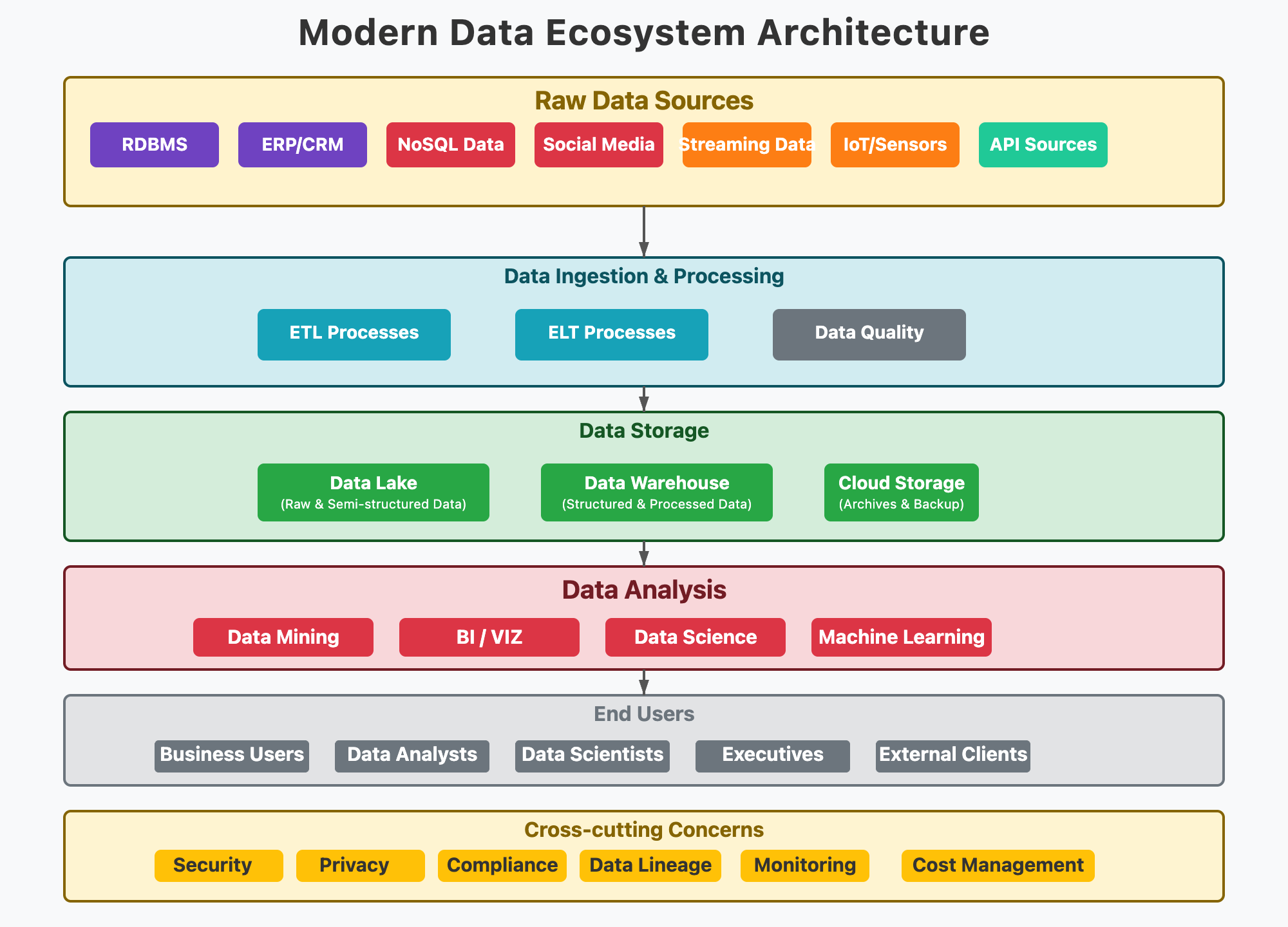

Where MLOps Fits in the Data/AI Journey

MLOps is part of all of this.

Without MLOps, many models stay as “academic projects.”

Today’s hiring market looks for hybrid skills (data + ML + cloud + ops).

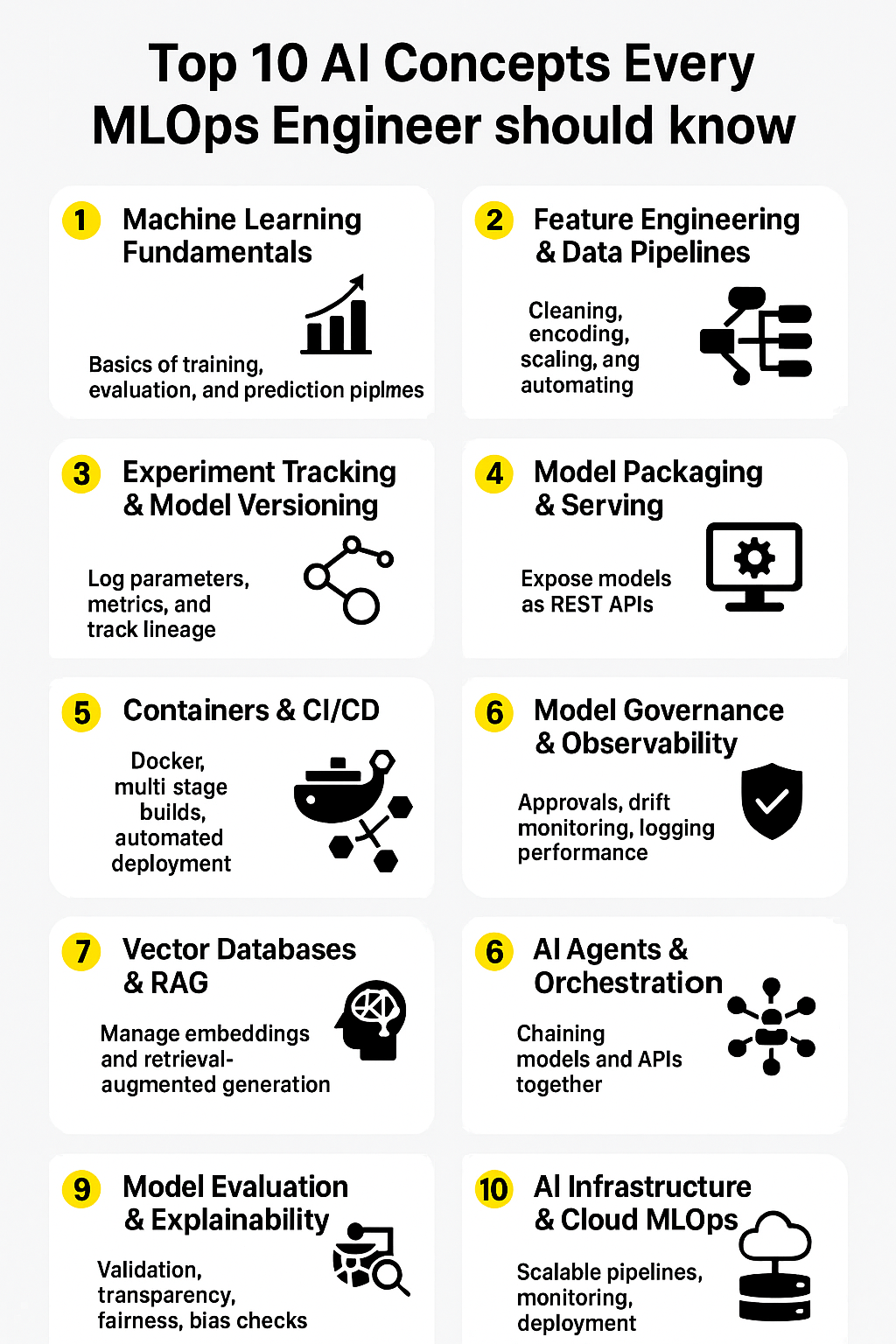

Course Positioning

Not too heavy on topics covered in other courses such as ML algorithms or NLP or Deep Learning or LLM.

This course is heavy on CICD - MLOps, Pipelines, versioning, monitoring, cloud platforms and related toolsets.

Course Focus = Industry Readiness

[Avg. reading time: 0 minutes]

AI then and now

#MachineLearning #ArtificialIntelligence

[Avg. reading time: 1 minute]

Expert Systems

Early AI systems (1970s–1990s)

Rule-based: encode human expert knowledge as if-then rules.

Precursor to modern ML, focused on symbolic reasoning rather than data-driven learning.

Pros

- Transparent and explainable (rules are visible).

- Effective in narrow, well-defined domains.

Cons

- Knowledge engineering is labor-intensive.

- Doesn’t scale well as rules explode.

- Cannot adapt automatically from new data.

[Avg. reading time: 3 minutes]

Fuzzy Logic

Logic that allows degrees of truth (not just True/False). Models uncertainty with values between 0 and 1.

graph TD

A["Is it Cold?"] --> B["Crisp Logic<br/>Yes = 1<br/>No = 0"]

A --> C["Fuzzy Logic<br/>Maybe Cold = 0.3<br/>Not really cold = 0.7"]

Useful in control systems and decision-making under vagueness.

Still used in various use cases to find out similarity like New Jersey similar to Jersey.

Pros

- Handles imprecise, uncertain, or linguistic data (“high temperature”, “low risk”).

- Good for rule-based control.

Cons

- Not data-driven → rules must be defined manually.

- Limited learning ability compared to ML.

Use Cases

- Washing machines that adjust cycles based on “fuzziness” of dirt level.

- Air conditioning systems adapting to “comfort level”.

- Automotive control (braking, transmission).

- Risk assessment systems.

[Avg. reading time: 3 minutes]

Machine Learning

A subset of AI where systems learn patterns from data and make predictions or decisions without being explicitly programmed.

-

One of the core pillars of AI.

-

Between traditional rule-based systems (Expert Systems) and modern Deep Learning/GenAI.

-

Provides the foundation for many practical AI applications used in industry today.

Pros

- Automates decision-making at scale.

- Flexible: can be applied to structured and unstructured data.

- Improves with more data and better features.

Cons

- Requires labeled data (for supervised learning).

- Models can overfit or underfit if not designed carefully.

- Often seen as a “black box” with limited interpretability.

Use Cases

- Fraud detection in finance.

- Customer churn prediction in telecom/retail.

- Demand forecasting in supply chain.

- Email spam filtering.

- Customer segmentation for targeted marketing.

- Market basket analysis (“people who buy X also buy Y”).

- Anomaly detection in cybersecurity and IoT.

#Supervised #Unsupervised #classification #regression

[Avg. reading time: 3 minutes]

Generative AI

A class of AI that can create new content (text, code, images, video, music) rather than just predicting outcomes.

Powered by foundation models like GPT, Stable Diffusion, etc.

-

Builds on Deep Learning + NLP + multimodal modeling.

-

Represents the shift from discriminative models (predicting) to generative models (creating).

Pros

- Enables creativity and automation at scale.

- Reduces time to draft, design, or brainstorm.

Cons

- Can hallucinate false information.

- High computational cost and environmental footprint.

- Raises copyright, ethics, and bias concerns.

Use Cases

- Text: AI writing assistants, code copilots.

- Image/video: marketing content generation, design prototyping.

- Data: generating synthetic data for ML training.

- Education: personalized learning materials and quizzes.

Key differences

| Traditional ML | Generative AI |

|---|---|

| Predicts outcome from features | Produces new content |

| Needs task-specific data | Pretrained on massive corpora |

| Optimized for accuracy | Optimized for creativity, coherence |

| Example: Predict churn | Example: Generate flying pigs/elephant |

[Avg. reading time: 4 minutes]

Reinforcement Learning

RLHF (Reinforcement Learning Human Feedback)

Its like humans learning todo and not todo.

A learning paradigm where an agent interacts with an environment, takes actions, and learns from reward signals.

Instead of labeled data, it uses trial-and-error feedback.

Complements supervised/unsupervised learning.

Strongly linked to decision-making and control tasks.

Example: YT recommends a video, if you watch it system understands that, if you choose don’t show this system reacts to that.

Here the agent is YT recommendation engine, action: user watching or ignoring the video. Rewards like/share or not-interested.

Pros

- Handles complex sequential decisions.

- Can learn optimal strategies without explicit rules.

- Mimics human/animal learning.

Cons

- Data and compute intensive.

- Reward design is tricky.

- Training can be unstable.

Use Cases

- Game AI: AlphaGo defeating world champions.

- Robotics: teaching robots to walk, grasp, or navigate.

- Finance: algorithmic trading strategies.

- Dynamic pricing in e-commerce.

flowchart TD

A[Prompt] --> B[Base LLM generates multiple responses]

B --> C[Human labelers rank responses]

C --> D[Reward Model learns preferences]

D --> E[Fine-tune LLM with Reinforcement Learning]

E --> F[Aligned ChatGPT]

[Avg. reading time: 4 minutes]

Agentic AI

AI systems that are autonomous agents: they can plan, reason, take actions, and use tools.

Builds on LLMs + RL concepts.

Can execute multi-step tasks with minimal human guidance.

Before Agentic AI

- Traditional AI -> task-specific models.

- LLMs -> good at generating text but mostly passive responders.

Transformation with Agentic AI

- Adds agency: memory, planning, acting.

- Can chain multiple AI capabilities (search + reasoning + action).

Pros

- Automates workflows end-to-end.

- Adaptable across domains.

- Learns from feedback loops.

Cons

- Hard to control (hallucinations, unsafe actions).

- High computational cost.

- Reliability and governance still open challenges.

Use Cases

- AI agents booking travel (search -> compare -> purchase).

- Customer support bots that escalate only when needed.

- Business process automation (invoice handling, data entry).

| Aspect | AI Assistant (Chatbot/LLM) | Agentic AI (Autonomous Agent) |

|---|---|---|

| Nature | Reactive → answers questions | Proactive → plans and executes tasks |

| Memory | Limited to current session | Has memory across interactions |

| Actions | Generates text/code only | Uses tools, APIs, external systems |

| Planning | One-shot response | Multi-step reasoning and decision-making |

| Adaptability | Needs explicit user prompts | Self-adjusts based on goals and feedback |

| Example Use Case | “What’s the weather in NYC?” → gives forecast | “Plan my weekend trip to NYC” → books flight, hotel, creates itinerary |

| Industry Example | Customer support FAQ bot | AI agent that handles returns, refunds, and escalations automatically |

[Avg. reading time: 3 minutes]

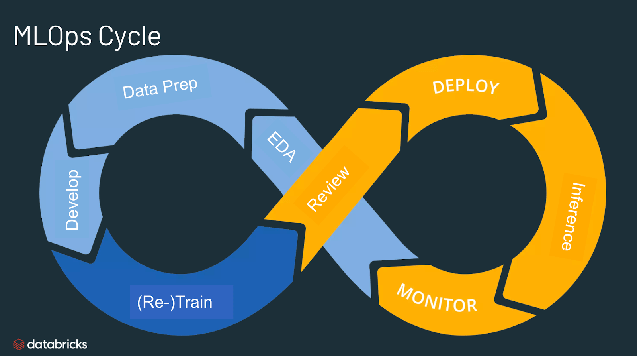

MLOps

Why MLOps

Operationalizing ML/AI models with focus on automation, collaboration, and reliability.

Building is easy, sustaining is hard.

Remember dieting/excercise?

- Companies moved past “build model in Jupyter” → now productionize models.

- 80% of ML projects fail due to lack of deployment + monitoring strategy.

- MLOps bridges Data → Model → Production.

Industry requirement

- Versioning models

- Monitoring drift

- Scalable deployment

- Regulatory compliance (audit trail, lineage)

Lifecycle

- Data ingestion -> data validation & quality checks -> feature engineering

- Model training -> validation -> experiment tracking & versioning

- Deployment (batch, real-time, API) -> rollback capabilities

- Monitoring

- Data drift (input distribution)

- Model drift (prediction accuracy)

- Concept drift (feature:label relationship)

- Infrastructure performance

- Continuous improvement -> retraining & iteration

Cross-Functional Teams

- Data Engineers

- Data Scientists

- ML Engineers

- Platform/DevOps Engineers

- Product Managers

Key Capabilities

- Reproducibility

- Scalability

- Governance & compliance

- Automated CI/CD pipelines

#cicd #mlops #devops #medallion

[Avg. reading time: 1 minute]

Differences across AI/ML systems

| Aspect | Traditional ML | NLP (Pre-GenAI) | GenAI | MLOps |

|---|---|---|---|---|

| Data | Structured, tabular | Text, tokens | Multi-modal | Any |

| Training | From small datasets | Task-specific corpora | Massive pretraining + fine-tune | Not about training, about lifecycle |

| Output | Prediction | Classification, tagging, parsing | Content (text, code, image) | Deployment + Ops |

| Role Focus | Data Scientist | NLP Researcher | Prompt Engineer, LLM Engineer | ML Engineer, Platform Eng. |

[Avg. reading time: 2 minutes]

Examples

Retail:

- Traditional ML -> Demand forecasting.

- GenAI -> Personalized product descriptions.

- MLOps -> Continuous retraining as seasons change.

Healthcare:

- Traditional ML -> Predict patient readmission.

- GenAI -> Auto-generate clinical notes.

- MLOps -> Ensure compliance & monitoring under HIPAA.

Finance:

- Traditional ML -> Fraud detection.

- GenAI -> AI-powered customer chatbots.

- MLOps -> Drift detection for fraud models.

| Traditional ML | GenAI | MLOps |

|---|---|---|

| Fraud detection (transaction classification) | AI-powered customer chatbots for support | Drift detection & alerts for fraud models |

| Credit scoring (loan approval risk models) | Personalized financial advice reports | Automated retraining with new credit bureau data |

| Stock price trend prediction | Summarizing financial reports & earnings calls | Compliance monitoring (audit trails for regulators) |

| Customer lifetime value prediction | Generating personalized investment recommendations | Model versioning & rollback in case of errors |

#finance #healthcare #retail #examples

[Avg. reading time: 1 minute]

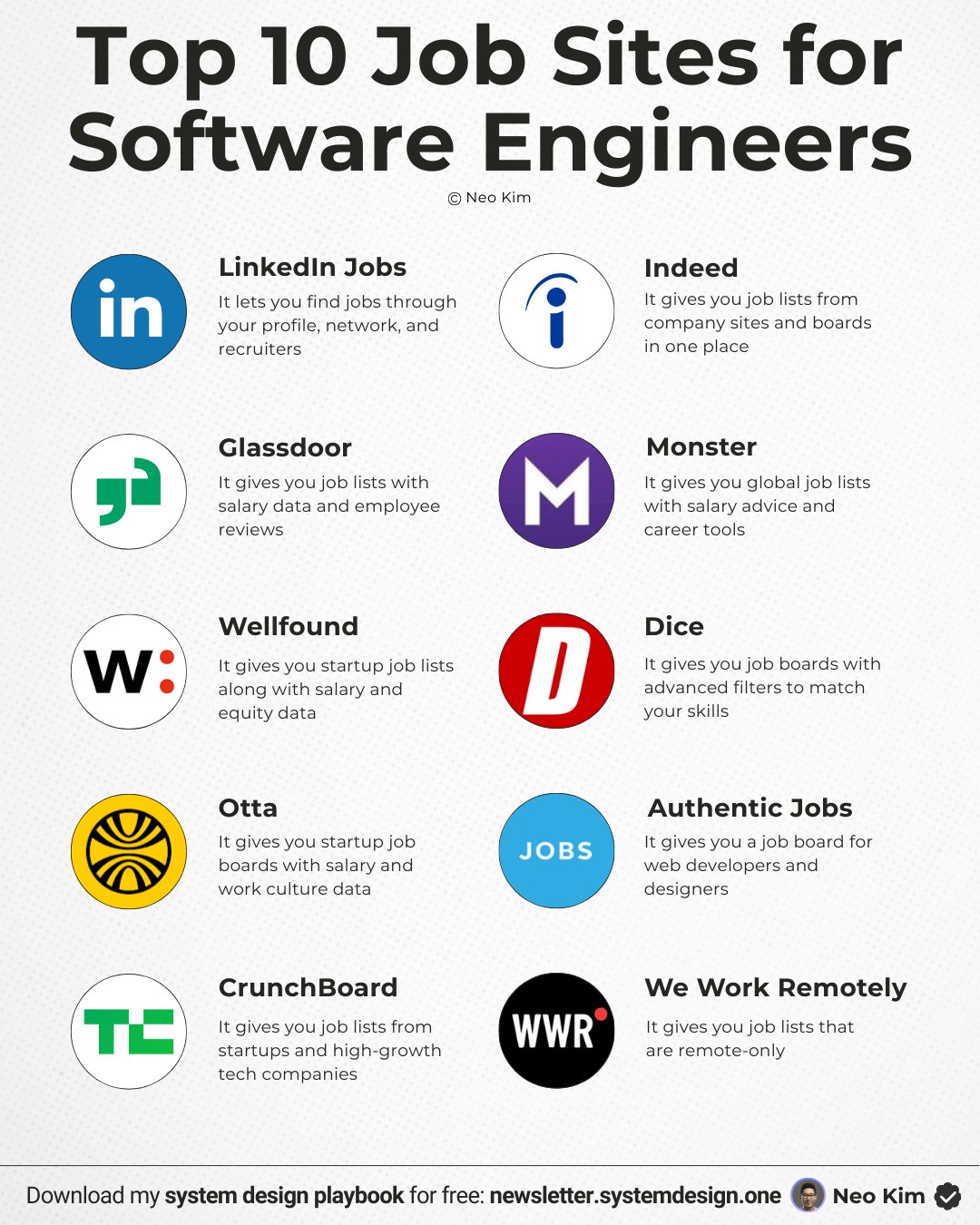

Job Opportunities

Traditional ML

- Data Scientist

- Applied ML Engineer

- Data Analyst -> ML transition

GenAI

- Prompt Engineer

- LLM Application Developer

- GenAI Product Engineer

- AI Research Scientist

MLOps

- ML Engineer (deployment, monitoring)

- MLOps Engineer (CI/CD pipelines for ML)

- Cloud ML Platform Engineer (Databricks, AWS Sagemaker, GCP Vertex AI, Azure ML)

#jobs #mlengineer #mlopsengineer

[Avg. reading time: 9 minutes]

Terms to Know

Regression

Predicting a continuous numeric value.

Use Case: Predicting house prices based on size, location, and number of rooms.

Linear Regression

A regression model assuming a straight-line relationship between input features and target.

Use Case: Estimating sales revenue as a function of advertising spend.

Classification

Predicting discrete categories.

Use Case: Classifying an email as spam or not spam.

Clustering

Grouping similar data points without labels.

Use Case: Segmenting unknown data into groups.

Feature Engineering

Creating new meaningful features from raw data to improve model performance.

Use Case: From “Date of Birth” → create “Age” as a feature for predicting insurance risk.

Overfitting

Model learns training data too well (including noise) -> poor generalization.

Use Case: Overfitting = a spam filter that memorizes training emails but fails on new ones.

Underfitting

Model too simple to capture patterns -> poor performance.

Use Case: Trying to predict house prices using only the average price (ignoring size, location, rooms, etc.).

Bias

A source of error that happens due to overly simplistic assumptions.

- Leads to underfitting.

Variance

A source of error that happens due to too much sensitivity to training data fluctuations.

- Leads to overfitting.

Model Drift

When a model’s performance degrades over time because data distribution changes.

Use Case: A churn model trained pre-pandemic performs poorly after online behavior changes drastically.

MSE

Mean Squared Error

Avg of the squared differences between predicted values and actual values.

Actual a: [10, 20, 30, 40, 50]

Predicted p : [12, 18, 25, 45, 60]

| i | Actual| Predicted | Error | Squared Error |

| - | ------|-----------|-------|---------------|

| 1 | 10 | 12 | -2 | 4 |

| 2 | 20 | 18 | 2 | 4 |

| 3 | 30 | 25 | 5 | 25 |

| 4 | 40 | 45 | -5 | 25 |

| 5 | 50 | 60 | -10 | 100 |

SS = 4 + 4 + 25 + 25 + 100 = 158

MSE (ss_res) = 158 / 5 = 31.6

R Square

Proportion of variane in the target explained by the model.

1.0 = Perfect Prediction. 0.0 = Model is no better than predicting the mean. Negative = Model is worse than just predicting the mean.

Mean of actual values = (10 + 20 + 30 + 40 + 50) / 5 = 30

Total Variation (ss_tot) : (10 - 30)^2 + (30 - 30)^2 + (40 - 30)^2 + (50 - 30)^2 = 400 + 100 + 0 + 100 + 400 = 1000

R^2 = 1 - (ss_res / ss_tot)

R^2 = 1 - (158/1000) = 0.842

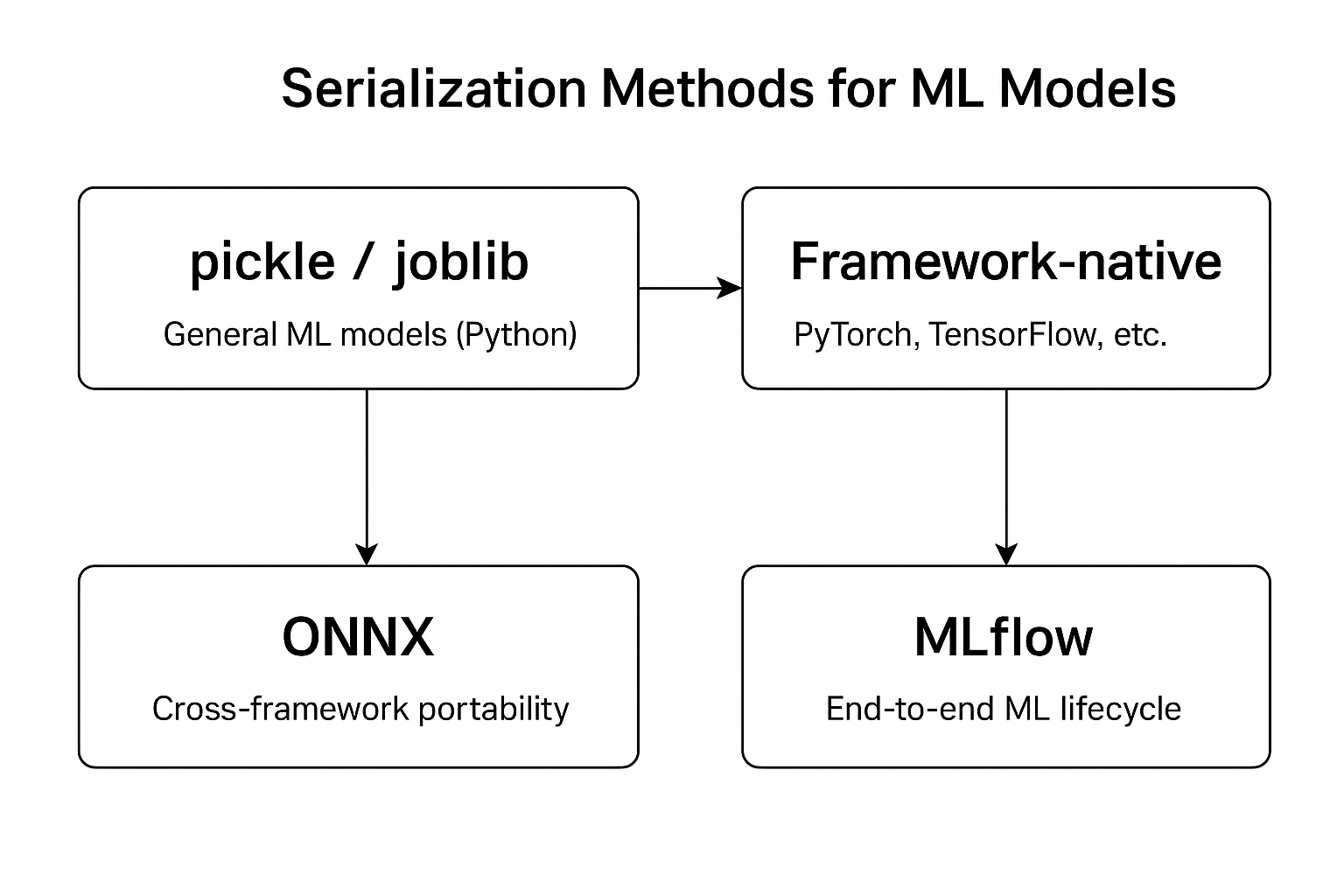

Serialization

The process of converting an in-memory object (e.g., a Python object) into a storable or transferable format (such as JSON, binary, or a file) so it can be saved or shared.

import json

data = {"name": "Ganesh", "course": "MLOps"}

# Serialization → Python dict → JSON string

serialized = json.dumps(data)

## Store the serialized data into JSON file if needed.

Deserialization

The process of converting the stored or transferred data (JSON, binary, file, etc.) back into an in-memory object that your program can use.

# Load it from JSON file

# Deserialization → JSON string → Python dict

deserialized = json.loads(serialized)

#serialization #deserialization #overfitting #underfitting

[Avg. reading time: 3 minutes]

Model vs Library vs Framework

python -m venv .demomodel

source .demomodel/bin/activate

pip install scikit-learn joblib

from sklearn.linear_model import LinearRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error, r2_score

from joblib import dump, load

import numpy as np

# Fake dataset: study_hours -> exam_score

rng = np.random.default_rng(42)

hours = rng.uniform(0, 10, size=100).reshape(-1, 1) # feature X

noise = rng.normal(0, 5, size=100) # noise

scores = 5 + 8*hours.ravel() + noise # target y

X_train, X_test, y_train, y_test =

train_test_split(hours, scores, test_size=0.2, random_state=42)

model = LinearRegression()

# Train (fit)

model.fit(X_train, y_train)

# Evaluate

pred = model.predict(X_test)

print("MSE:", mean_squared_error(y_test, pred))

print("R2 :", r2_score(y_test, pred))

print("Learned slope and intercept:", model.coef_[0], model.intercept_)

# Save

dump(model, "linear_hours_to_score.joblib")

# Inference on new data

new_hours = np.array([[2.0], [5.0], [9.0]])

print("Predicted scores:", model.predict(new_hours))

# Predict after load

restored = load("linear_hours_to_score.joblib")

print("Loaded model predicts:", restored.predict(new_hours))

Fun Task

- Identify the Algorithj, Library, Model in this code

- What is MSE, R2_Score

- What is .joblib

- What is the number 42?

#model #library #framework #r2score #mse

[Avg. reading time: 2 minutes]

Explanation

-

Library: scikit-learn

-

Algorithm: Linear Regression (Mathematics)

-

Prebuilt Model: LinearRegression (part of scikit-learn library)

-

model.fit(): Custom built model for this data.

-

42 answer to the ultimate question of Life, the Universe, and Everything.

-

model.coef_[0] → the slope learned from data How much the target (exam score) increases for 1 extra unit of study hours model.intercept_ → the intercept The predicted target value when study hours = 0

Example:

Learned slope and intercept: 8.1 4.9

8.1 * hrs + 4.9

If a student studies 0 hours, predicted score ≈ 4.9 (baseline knowledge).

If a student studies 5 hours, predicted score ≈ 8.1 × 5 + 4.9 = 45.4.

[Avg. reading time: 3 minutes]

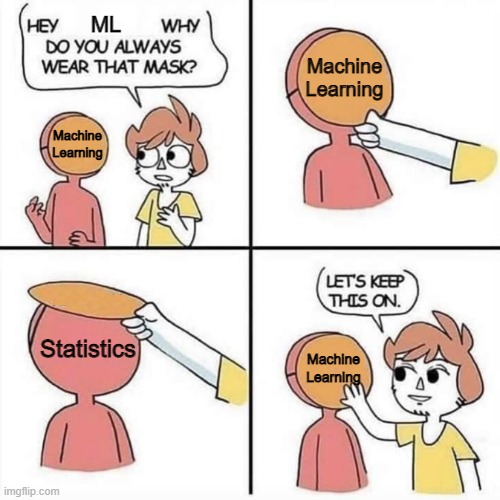

Statistical vs ML Models

Statistical Models

- Focus on inference -> understanding relationships between variables.

- Assume an underlying distribution (e.g., linear, normal).

- Typically work well with smaller datasets.

Goal: test hypotheses, estimate parameters.

Example: Linear regression to explain how income depends on education, experience, etc.

Machine Learning Models

- Focus on prediction -> finding patterns that generalize to unseen data.

- Fewer assumptions about data distribution.

- Can handle very large datasets and high-dimensional data.

Goal: optimize predictive performance.

Example: Random Forest predicting whether a customer will churn.

Key Similarities

Both use data to build models.

Both rely on training (fit) and evaluation (test).

Overlaps: linear regression is both a statistical model and an ML model, depending on context.

Book worth reading

The Manga Guide to Linear Algebra.

https://www.amazon.com/dp/1593274130

(Not an affiliate or referral)

On a lighter note

#statistics #ml #linearalgebra

[Avg. reading time: 3 minutes]

Types of ML Models

Supervised Learning

Data has input features (X) and target labels (y).

Model learns mapping: f(X) → y.

Examples:

- Regression -> Predicting house prices, demand forecast, server usage.

- Classification -> Spam vs Non-spam email or Customer churn.

Unsupervised Learning

Data has inputs only, no labels.

Goal: find hidden patterns or structure.

Examples:

- Clustering -> Customer segmentation.

- Association Rules -> Market basket analysis (“people who buy X also buy Y”).

- Dimensionality Reduction -> Principal Component Analysis (PCA) for visualization.

- Taking a high dimensional data and reducing it to fewer dimensions.

Reinforcement Learning (RL)

Agent interacts with environment -> learns by trial and error.

Used for decision-making & control.

Examples:

- Robotics & self-driving cars.

- Newer Video Games.

- OTT Content recommendations.

- Ads.

Semi-Supervised Learning

Mix of few labeled + many unlabeled data points.

Often used in NLP and computer vision.

Example: labeling 1,000 medical images, then using 100,000 unlabeled ones to improve model.

[Avg. reading time: 2 minutes]

ML Lifecycle

Collect Data (Data Engineers Role)

- Gather raw data from systems (databases, APIs, sensors, logs).

- Ensure sources are reliable and updated.

Clean & Prepare

- Handle missing values, outliers, and noise.

- Feature engineering: create new features, scale/encode as needed.

- Data splitting (train/validation/test).

Train Model

- Choose algorithm (supervised, unsupervised, reinforcement, etc.).

- Train on training set, tune hyperparameters.

Evaluate

- Use appropriate metrics:

- Classification → Accuracy, Precision, Recall, F1.

- Regression → RMSE, MAE, R².

- Cross-validation for robustness.

Deploy

- Make model accessible via API, batch jobs, or embedded in applications.

- Consider scaling (cloud, containers, edge devices).

Monitor & Improve

- Track data drift, concept drift, and model performance decay.

- Automate retraining pipelines (MLOps).

- Capture feedback loop to improve features and models.

#collect #clean #train #evaluate

[Avg. reading time: 6 minutes]

Data Preparation

~80% of the time in ML projects is spent on Data Preparation & Cleaning, and ~20% on Model Training.

The process of making raw data accurate, complete, and structured so it can be used for model training.

Wait data cleaning is not ML engineers job, it belongs to Data Engineer.

True but..

Data Engineers focus on collection and validation at scale:

- Ingest raw data from source systems (databases, APIs, IoT, logs).

- Build ETL/ELT pipelines (Bronze → Silver → Gold).

- Ensure data quality checks (avoid duplicates, schema validation, type checks, primary key uniqueness).

- Handle big data infrastructure: Spark, Databricks, Airflow, Kafka.

- Deliver curated data (often “Silver” or “Gold” layer) for downstream ML.

ML Engineers / Data Scientists take over once curated data is available:

- Apply ML-specific cleaning & prep:

- Impute missing values intelligently (mean/median/model-based).

- Encode categorical variables (one-hot, embeddings).

- Normalize/standardize numeric features.

- Text normalization, tokenization, embeddings.

- Create features meaningful to the ML model.

- Split data into train/validation/test sets.

flowchart LR

DE[**Data Engineer**<br/><br/>- ETL/ELT Pipelines<br/>- Schema Validation<br/>• Deduplication<br/>- Type Checks]

OVERLAP[**Common** <br/><br/>- Remove Duplicates<br/>- Ensure Consistency]

MLE[**ML Engineer**<br/><br/>-Handle Missing Values<br/>- Feature Scaling<br/>- Imputation<br/>- Encoding & Embeddings<br/>- Train/Val/Test Split]

DE --> OVERLAP

MLE --> OVERLAP

For Example

Tabular Data

Data Engineer: ensures no duplicate customer IDs in database.

ML Engineer: fills missing “Age” values with median, scales “Income”.

Text Data

Data Engineer: stores raw customer reviews as UTF-8 encoded text.

ML Engineer: lowercases, removes stopwords, converts to embeddings.

Image Data

Data Engineer: validates images aren’t corrupted on ingest.

ML Engineer: resizes images, normalizes pixel values.

[Avg. reading time: 6 minutes]

Data Cleaning

Check for Target Leakage

What it is: Features that give away the answer (future info in training data).

Why it matters: Makes the model look perfect in training but useless in production.

Example:

When building a model having this column is not correct as in Production you will never have this during Prediction. This can be used when Testing your model prediction.

refund_issued_flag when predicting “Will this order be refunded?”.

Validate Labels

What it is: Make sure labels are correct, consistent, and usable.

Why it matters: Garbage labels = garbage predictions.

Example:

Churn column has values: yes, Y, 1, true.

Normalize to 1 = churn, 0 = not churn.

Handle Outliers Intentionally

What it is: Extreme values that distort training.

Why it matters: “Emp_Salary = 10,000,000” can throw off predictions.

Example

Cap at 99th percentile.

Flag as anomaly instead of training on it.

Enforce Feature Types

What it is: Make sure data types match their meaning.

Why it matters: Models can’t learn if types are wrong.

Example:

customer_id stored as integer → model may treat it as numeric.

Why is that problem, customer_id = 20 will have more weightage than customer_id = 1

Convert to string (categorical).

Standardize Categories

What it is: Inconsistent labels in categorical columns.

Why it matters: Model may treat the same thing as different classes.

Example:

Country: USA, U.S.A., United States.

Map all to United States.

Normalize Text for ML

What it is: Clean and standardize text features.

Why it matters: Prevents the model from treating “Hello” and “hello!” as different.

Example:

Lowercasing, removing punctuation, stripping whitespace.

Keep a copy of raw text for audit.

Protect Data Splits

What it is: Make sure related rows don’t leak between train/test.

Why it matters: Prevents unfair accuracy boost.

Example:

Same student appears in both train and test sets.

Fix: Group by student_id when splitting.

#datacleaning #mlcleaning #normalize_data

[Avg. reading time: 11 minutes]

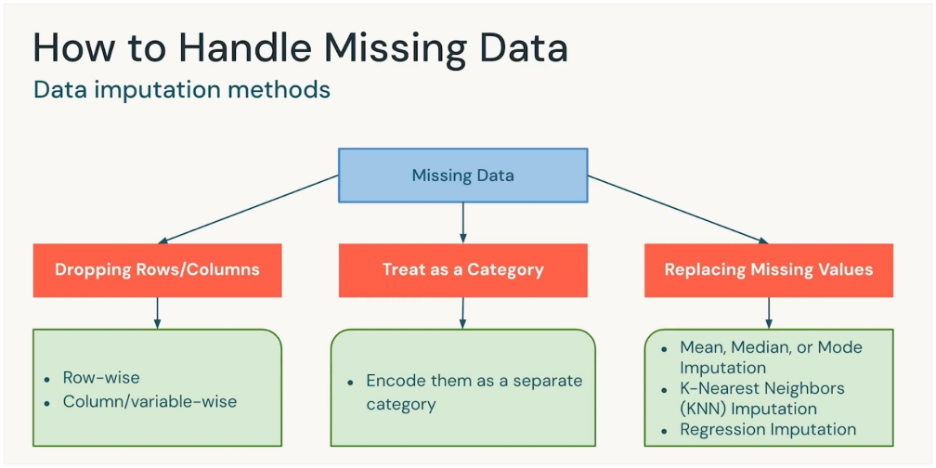

Data Imputation

Data Imputation is the process of filling in missing values in a dataset with estimated or predicted values.

Data imputation aims to enhance the quality and completeness of the dataset, ultimately improving the performance and reliability of the ML model.

Problems with Missing Data

- Reduced Model

- Biased Inferences

- Imbalanced Representations

- Increased complexity in Model handling

Data Domain knowledge is important before choosing the right method.

Dropping Rows/Columns

Remove the rows or columns that contain missing values.

- If the percentage of missing data is very small.

- If the column isn’t important for the model.

Example: Drop the few rows out of Million where “Age” is missing.

Treat as a Category

Encode “missing” or “NA” or “Unknown” as its own category.

- For categorical variables (like Country, Gender, Payment Method).

When “missing” itself carries meaning (e.g., customer didn’t provide income → may be sensitive).

Example: Add a category Unknown to “Marital Status” column.

Data with Missing Values

| ID | Country |

|---|---|

| 1 | USA |

| 2 | Canada |

| 3 | Null |

| 4 | India |

| 5 | NA (missing) |

After treating as a Category

| ID | Country |

|---|---|

| 1 | USA |

| 2 | Canada |

| 3 | Missing |

| 4 | India |

| 5 | Missing |

The model will see “Missing” as just another value like “USA” or “India.”

Replacing Missing Values (Imputation)

Fill missing values with a reasonable estimate.

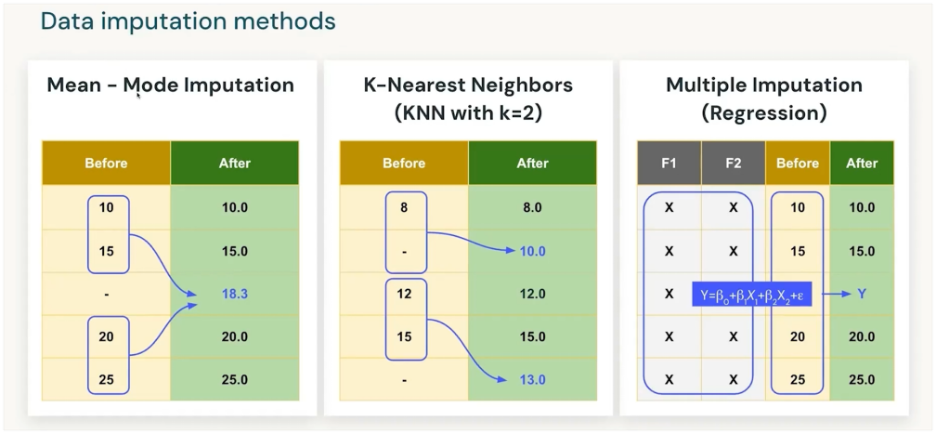

Methods:

- Mean/Median/Mode: Quick fixes for numeric/categorical data.

- KNN Imputation: Fill value based on “closest” similar records.

- Regression Imputation: Predict the missing value using other features.

Example: Replace missing “Salary” with median salary of the group.

Using regression models repeatedly (with randomness) to fill missing data, producing several plausible datasets, and then combining them for analysis.

| Age | Education | Income |

|---|---|---|

| 30 | Masters | ? |

| 40 | PhD | 120K |

| 35 | Bachelors | 80K |

- Step 1: Fit regression: Income ~ Age + Education.

- Step 2: Predict missing Income for Age=30, Edu=Masters.

- Step 3: Add random noise → 95K in dataset1, 92K in dataset2, 98K in dataset3.

- Step 4: Analyze all 3 datasets, combine results.

Downside: Delay in process and computing time. More missing values more coputation time.

- Drop : if it’s tiny and negligible.

- Category : if it’s categorical.

- Replace : if it’s numeric and important.

- KNN/Regression : if you want smarter imputations and can afford compute.

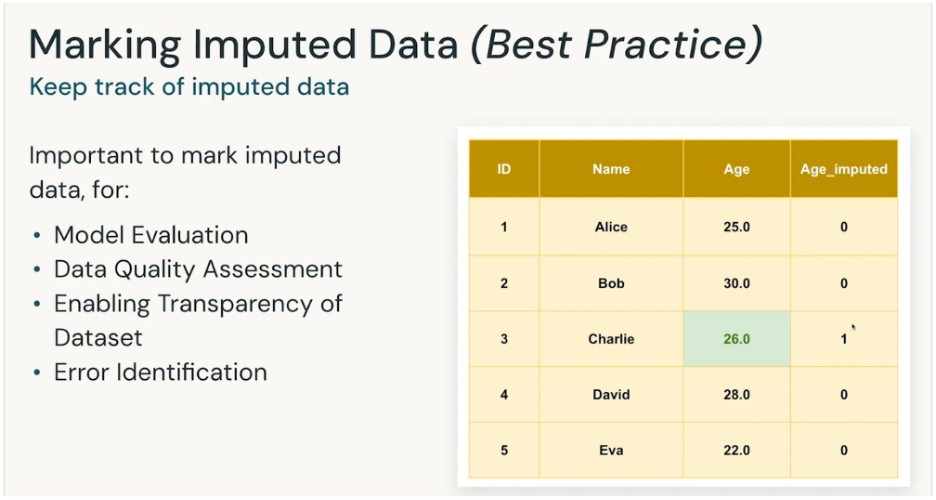

It is important to mark the imputated data

To know which data is from source and which is calculated. So its handled with pinch of salt.

| Method | When to Use | Pros | Cons |

|---|---|---|---|

| Drop Rows/Columns | When % of missing data is very small (e.g., <5%) or the feature is unimportant | - Simple and fast - No assumptions needed | - Lose data (rows) - Risk of losing valuable features (columns) |

| Treat as a Category | For categorical variables where “missing” may carry meaning | - Preserves all rows - Captures the “missingness” as useful info | - Only works for categorical data - Can create an artificial category if missing isn’t meaningful |

| Replace with Mean/Median/Mode | For numeric data (mean/median) or categorical (mode) | - Easy to implement - Keeps dataset size intact | - Distorts distribution - Ignores correlations between features |

| KNN Imputation | When dataset is not too large and similar neighbors make sense | - Considers relationships between features - More accurate than simple averages | - Computationally expensive - Sensitive to scaling and choice of K |

| Regression Imputation | When missing values can be predicted from other variables | - Uses feature relationships - Can be very accurate | - Risk of “overfitting” imputations - Adds complexity |

#dataimputation #knn #encode #dropdata

[Avg. reading time: 13 minutes]

Data Encoding

Data Encoding is the process of converting categorical data (like colors, countries, product types) into a numeric format that ML models can understand.

Unlike numerical data, categorical data is not directly usable because models operate on numbers, not labels.

Encoding ensures categorical values are represented in a way that preserves meaning and avoids misleading the model.

Typically rule-based.

Example: Products

| ID | Product |

|---|---|

| 1 | Laptop |

| 2 | Phone |

| 3 | Tablet |

| 4 | Phone |

Label Encoding

Assigns each category a unique integer.

| ID | Product (Encoded) |

|---|---|

| 1 | 0 |

| 2 | 1 |

| 3 | 2 |

| 4 | 1 |

Pros:

- Very simple, minimal storage.

- Works well for tree-based models.

Cons:

- Implies an order between categories (Laptop < Phone < Tablet).

- Misleads linear models.

One-Hot Encoding

Creates a binary column for each category.

| ID | Laptop | Phone | Tablet |

|---|---|---|---|

| 1 | 1 | 0 | 0 |

| 2 | 0 | 1 | 0 |

| 3 | 0 | 0 | 1 |

| 4 | 0 | 1 | 0 |

Pros:

- No ordinal assumption.

- Easy to interpret.

Cons:

- High dimensionality for many products (e.g., thousands of SKUs).

- Sparse data, more memory needed.

Ordinal Encoding

Encodes categories when they have a natural order.

Works for things like product size or version level.

Example (Product Tier):

| ID | Product Tier |

|---|---|

| 1 | Basic |

| 2 | Standard |

| 3 | Premium |

| 4 | Standard |

After Ordinal Encoding:

| ID | Product Tier (Encoded) |

|---|---|

| 1 | 1 |

| 2 | 2 |

| 3 | 3 |

| 4 | 2 |

Pros:

- Preserves rank/order.

- Efficient storage.

Cons:

- Only valid if order is real (Basic < Standard < Premium).

- Wrong if categories are unordered (Laptop vs Phone).

Target Encoding (Mean Encoding)

Replaces each category with the mean of the target variable.

Target - “Purchased” Yes=1, No=0

| ID | Product | Purchased |

|---|---|---|

| 1 | Laptop | 1 |

| 2 | Phone | 0 |

| 3 | Tablet | 1 |

| 4 | Phone | 1 |

| ID | Product (Encoded) | Purchased |

|---|---|---|

| 1 | 1.0 | 1 |

| 2 | 0.5 | 0 |

| 3 | 1.0 | 1 |

| 4 | 0.5 | 1 |

Compute mean purchase rate:

Laptop = 1.0 Phone = 0.5 Tablet = 1.0

Pros:

- Great for high-cardinality features (e.g., hundreds of product SKUs).

- Often improves accuracy.

- Keeps dataset compact (just 1 numeric column).

- Often boosts performance in models like Logistic Regression or Gradient Boosted Trees.

Cons:

- Risk of data leakage if target encoding is done on the whole dataset.

- Must use cross-validation to avoid leakage.

- Compute intensive.

| Encoding Type | Best For | Avoid When |

|---|---|---|

| Label Encoding | Tree-based models, low-cardinality products | Linear models, unordered categories |

| One-Hot Encoding | General ML, few product categories | Very high-cardinality features |

| Ordinal Encoding | Ordered categories (tiers, sizes, versions) | Unordered categories (Phone vs Laptop) |

| Target Encoding | High-cardinality products, with proper CV | Without CV (leakage risk) |

Multiple Categorical Columns

| ID | Product | Product Tier | Category | Purchased |

|---|---|---|---|---|

| 1 | Laptop | Premium | PC | 1 |

| 2 | Phone | Basic | Mobile | 0 |

| 3 | Tablet | Standard | Electronics | 1 |

| 4 | Phone | Premium | Mobile | 1 |

- Product: Laptop, Phone, Tablet

- Product Tier: Basic < Standard < Premium (ordered)

- Category: Electronics, Accessories, Clothing (unordered)

Label Encoding (all columns)

Replace each category with an integer.

| ID | Product | Product Tier | Category |

|---|---|---|---|

| 1 | 0 | 2 | 0 |

| 2 | 1 | 0 | 1 |

| 3 | 2 | 1 | 2 |

| 4 | 1 | 2 | 1 |

Artificial order created (e.g., PC=0, Mobile=1, Electronics=2).

One-Hot Encoding (all columns)

| ID | Laptop | Phone | Tablet | Tier_Basic | Tier_Standard | Tier_Premium | Cat_PC | Cat_Mobile | Cat_Electronics |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 |

| 2 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 3 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 |

| 4 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 |

Very interpretable, but column explosion if you have 50+ products or 100+ categories.

Mixed Encoding (best practice)

- Product → One-Hot (few categories).

- Product Tier → Ordinal (Basic=1, Standard=2, Premium=3).

- Category → One-Hot (PC, Mobile, Electronics).

| ID | Laptop | Phone | Tablet | Tier (Ordinal) | Cat_PC | Cat_Mobile | Cat_Electronics |

|---|---|---|---|---|---|---|---|

| 1 | 1 | 0 | 0 | 3 | 1 | 0 | 0 |

| 2 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |

| 3 | 0 | 0 | 1 | 2 | 0 | 0 | 1 |

| 4 | 0 | 1 | 0 | 3 | 0 | 1 | 0 |

#onehot_encoding #target_encoding #label_encoding

[Avg. reading time: 6 minutes]

Feature Engineering

The process of transforming raw data into more informative inputs (features) for ML models.

Goes beyond encoding: you can create new features/metrics (like derived columns in the DB world) that pure encoding does not offer.

The goal of FE is to improve model accuracy, interpretability, and generalization.

Example (Laptop Sales):

Purchase Date = 2025-09-02

Derived Features:

- Month = 09

- DayOfWeek = Tuesday

- IsHolidaySeason = No

- IsWeekend = No

- IsLeapYear= No

- Quarter = Q3

Encoding (One-Hot, Label, Target) = only turns categories into numbers.

But real-world data often hides useful patterns in dates, interactions, domain knowledge, or semantics.

| ID | Product | Purchase Date | Price | PurchasedAgain |

|---|---|---|---|---|

| 1 | Laptop | 2023-12-01 | 1200 | 1 |

| 2 | Laptop | 2024-07-15 | 1100 | 0 |

| 3 | Phone | 2024-05-20 | 800 | 1 |

| 4 | Tablet | 2024-08-05 | 600 | 1 |

- Encoding only handles Product → One-Hot or Target.

Feature Engineering adds new insights:

- From Purchase Date: extract Month, DayOfWeek, IsHolidaySeason.

- From Price: create Discounted? (if < avg product price).

- Combine features: Price / AvgCategoryPrice.

Basic Feature Engineering

Improve signals/patterns without domain-specific knowledge.

Scaling/Normalization: Price → (Price – mean) / std

Date/Time Features: Purchase Date → Month=12, DayOfWeek=Friday

Polynomial/Interaction: Price × Tier

Pros:

- Easy to implement.

- Immediately boosts many models (especially linear/Neural Networks).

Cons:

- Risk of adding noise if done blindly.

- Limited unless combined with domain insights.

Domain-Specific Feature Engineering

Apply business/field knowledge.

Examples:

Finance: Debt-to-Income Ratio, Credit Utilization %

Healthcare: BMI = Weight / Height², risk score categories

IoT: Rolling averages, peak detection in sensor data.

Pros:

- Captures real-world meaning → big performance gains.

- Makes models explainable to stakeholders.

Cons:

- Requires domain expertise.

- Not always transferable between datasets.

#feature_engineering #domain_specific

[Avg. reading time: 10 minutes]

Vectors

A vector is just an ordered list of numbers that represents a data point so models can do math on it.

Think “row -> numbers” for tabular data, or “text/image -> numbers” after a transformation.

Example:

Price = 1200, Weight = 2kg, Warranty = 24 months → Vector = [1200, 2, 24]

Types of Vectors

Tabular Feature Vector

Concatenate numeric columns (and encoded categoricals) into a single vector.

ML engineer/data scientist during data prep/FE (training) and the same code at inference.

Example: [Price, Weight, Warranty] → [1200, 2, 24].

Sparse Vectors

High-dimensional vectors with many zeros (e.g., One-Hot, Bag-of-Words, TF-IDF).

Encoding/featurization function in your pipeline.

Example

Products = {Laptop, Phone, Pen}

Laptop → [1, 0, 0]

Phone → [0, 1, 0]

Pen → [0, 0, 1]

Dense Vectors (compact, mostly non-zeros)

Lower-dimensional, compact numeric representation

Created by algorithms (scalers/PCA) or models (embeddings) in your pipeline.

Lower-dimensional, compact, mostly non-zeros → dense.

Example: Not actual values

Laptop → [0.65, -0.12, 0.48]

Phone → [0.60, -0.15, 0.52]

Pen → [0.10, 0.85, -0.40]

Laptop and Phone vectors are close together.

Model-Derived Feature Vectors

Dense vectors specifically generated by models like CNN/Transformer as a vector. Mainly used with Computer Vision. Image classification, object detection, face recognition, voice processing.

Models generate them during feature extraction (training & inference).

Example: BERT sentence vector, ResNet image features.

| Vector Type | Who designs it? | Who computes it? | When it’s computed | Example |

|---|---|---|---|---|

| Tabular feature vector | ML Eng/DS (choose columns) | Pipeline code | Train & Inference | [Price, Weight, Warranty] |

| Sparse (One-Hot/TF-IDF) | ML Eng/DS (choose encoder) | Encoder in pipeline | Train (fit) & Inference (transform) | One-Hot Product |

| Dense (scaled/PCA) | ML Eng/DS (choose scaler/PCA) | Scaler/PCA in pipeline | Train (fit) & Inference (transform) | StandardScaled price, PCA(100) |

| Model features / Embeddings | ML Eng/DS (choose model) | Model (pretrained or trained) | Train & Inference | BERT/ResNet/categorical embedding |

MLOps ensures the same steps run at inference to avoid train/serve skew.

Example of Dense Vector

python -m venv .densevector

source .densevector/bin/activate

pip install sentence-transformers

from sentence_transformers import SentenceTransformer

# Load a pre-trained model (MiniLM is small & fast)

model = SentenceTransformer('all-MiniLM-L6-v2')

text = "Laptop"

# Convert text into dense vector

vector = model.encode(text)

print("Dense Vector Shape:", text, vector.shape)

print("Dense Vector (first 10 values):", vector[:10])

from sentence_transformers import SentenceTransformer

from sklearn.metrics.pairwise import cosine_similarity

import numpy as np

# Load model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Words

texts = ["Laptop", "Computer", "Pencil"]

# Encode all

vectors = model.encode(texts)

# Convert to numpy array

vectors = np.array(vectors)

# Cosine similarity matrix

sim_matrix = cosine_similarity(vectors)

# Display similarity scores

for i in range(len(texts)):

for j in range(i+1, len(texts)):

print(f"Similarity({texts[i]} vs {texts[j]}): {sim_matrix[i][j]:.4f}")

#vectors #densevector #sparsevector #tabularvector

[Avg. reading time: 8 minutes]

Embeddings

Embeddings transform high-dimensional categorical or textual data into a compact, dense vector space.

Similar items are placed closer together in vector space -> models can understand similarity.

- These representations capture relationships and context among different entities.

- Used in Recommendation Systems, NLP, Image Search and more.

- Can be learning from data using neural networks or retrieved from pretrained models (eg: Word2Vec, FastText)

Use Cases

- Search & Retrieval: Semantic search, image search.

- NLP: Word/sentence embeddings for sentiment, chatbots, translation.

- Computer Vision: Image embeddings for similarity or classification.

Advantages over traditional encoding:

- Handle high-cardinality categorical features (e.g., millions of products).

- Capture context and semantics (“Laptop” is closer to “Computer” than “Pencil”).

- Lower-dimensional → more efficient than One-Hot or TF-IDF.

Types of Embeddings

Word Embeddings (Text)

Represent words as vectors so that semantically similar words are close together.

Examples: Word2Vec, GloVe, FastText.

“king” – “man” + “woman” = “queen”

Used in: sentiment analysis, translation, chatbots.

Sentence / Document Embeddings (Text)

Represent longer text (sentences, paragraphs, docs) in vector form.

Capture context and meaning beyond individual words.

Examples: BERT, Sentence-BERT, Universal Sentence Encoder.

“The laptop is fast” and “This computer is quick” → close vectors.

Image Embeddings (Computer Vision)

Represent images as vectors extracted from CNNs or Vision Transformers.

Capture visual similarity (shapes, colors, objects).

Examples: ResNet, CLIP (image+text).

A cheetah photo and a leopard photo → embeddings close together (both cat family).

Used in: image search, face recognition, object detection.

Audio / Speech Embeddings

Convert audio waveforms into dense vectors capturing phonetics and semantics.

Examples: wav2vec, HuBERT.

Voice saying “Laptop” → embedding close to text embedding of “Laptop”.

Used in: speech recognition, speaker identification.

Graph Embeddings

Represent nodes/edges in a graph (social networks, knowledge graphs).

Capture relationships and network structure.

Examples: Node2Vec, DeepWalk, Graph Neural Networks (GNNs).

In a product graph, Laptop node embedding will be close to Mouse if often co-purchased.

| Type | Example Algorithms | Data Type | Use Cases |

|---|---|---|---|

| Word | Word2Vec, GloVe | Text (words) | NLP basics |

| Sentence/Doc | BERT, SBERT | Text (longer) | Semantic search, QA |

| Categorical | Embedding layers | Tabular (IDs) | Recommenders, fraud detection |

| Image | ResNet, CLIP | Vision | Image search, recognition |

| Audio | wav2vec, HuBERT | Audio | Speech-to-text, voice auth |

| Graph | Node2Vec, GNNs | Graphs | Social networks, KG search |

#embeddings [#<abbr title="Bidirectional Encoder Representations from Transformers">BERT</abbr>](../tags.md#BERT "Tag: BERT") #Word2Vec #NLP

[Avg. reading time: 7 minutes]

Life Before MLOps

Challenges Faced by ML Teams.

Moving Models from Dev → Staging → Prod

Models were often shared as .pkl or joblib files, passed around manually.

Problem: Dependency mismatches (Python, sklearn version), fragile handoffs.

Stopgap: Packaging models with Docker images, but still manual and inconsistent.

Champion vs Challenger Deployment

Teams struggled to test a new (challenger) model against the current (champion).

Problem: No controlled A/B testing or shadow deployments → risky rollouts.

Stopgap: Manual canary releases or running offline comparisons.

Model Versioning Confusion

Models saved as model_final.pkl, model_final_v2.pkl, final_final.pkl.

Problem: Nobody knew which version was truly in production.

Stopgap: Git or S3 versioning for files, but no link to experiments/data.

Inference on Wrong Model Version

Even if multiple versions existed, production systems sometimes pointed to the wrong one.

Problem: Silent failures, misaligned experiments vs prod results.

Stopgap: Hardcoding file paths or timestamps — brittle and error-prone.

Train vs Serve Skew (Data-Model Mismatch)

Preprocessing done in notebooks was re-written differently in prod code.

Problem: Same model behaves differently in production.

Stopgap: Copy-paste code snippets, but no guarantee of sync.

Experiment Tracking Chaos

Results scattered across notebooks, Slack messages, spreadsheets.

Problem: Couldn’t reproduce “that good accuracy we saw last week.”

Stopgap: Manually logging metrics in Excel or text files.

Reproducibility Issues

Same code/data gave different results on different machines.

Problem: No control of data versions, package dependencies, or random seeds.

Stopgap: Virtualenvs, requirements.txt — helped a bit but not full reproducibility.

Lack of Monitoring in Production

Once deployed, no one knew if the model degraded over time.

Problem: Models silently failed due to data drift or concept drift.

Stopgap: Occasional manual performance checks, but no automation.

Scaling & Performance Gaps

Models trained in notebooks failed under production loads.

Problem: Couldn’t handle large-scale data or real-time inference.

Stopgap: Batch scoring jobs on cron — but too slow for real-time use cases.

Collaboration Breakdowns

Data Scientists, Engineers, Ops worked in silos.

Problem: Miscommunication -> wrong datasets, broken pipelines, delays.

Stopgap: Jira tickets and handovers — but still slow and error-prone.

Governance & Compliance Gaps

No audit trail of which model made which prediction.

Problem: Risky for regulated domains (finance, healthcare).

Stopgap: Manual logging of predictions — incomplete and unreliable.

#mlops #development #production

[Avg. reading time: 13 minutes]

Quiz

Note: This is a practice quiz and will not be graded. The purpose is to help you check your understanding of the concepts we covered.

[Avg. reading time: 0 minutes]

Developer Tools

[Avg. reading time: 5 minutes]

Introduction

Before diving into Data or ML frameworks, it's important to have a clean and reproducible development setup. A good environment makes you:

- Faster: less time fighting dependencies.

- Consistent: same results across laptops, servers, and teammates.

- Confident: tools catch errors before they become bugs.

A consistent developer experience saves hours of debugging. You spend more time solving problems, less time fixing environments.

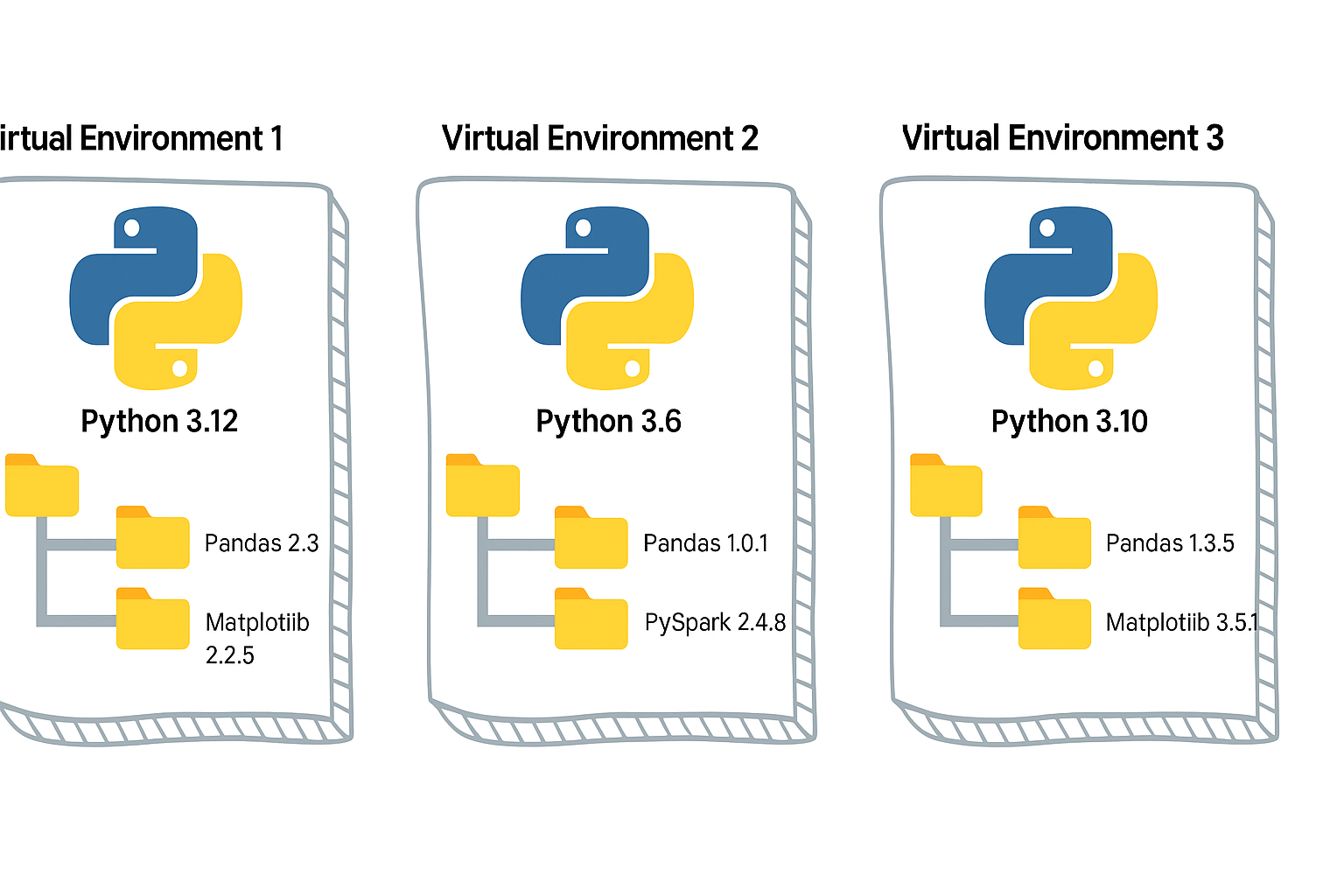

Python Virtual Environment

- A virtual environment is like a sandbox for Python.

- It isolates your project’s dependencies from the global Python installation.

- Easy to manage different versions of library.

- Must depend on requirements.txt, it has to be managed manually.

Without it, installing one package for one project may break another project.

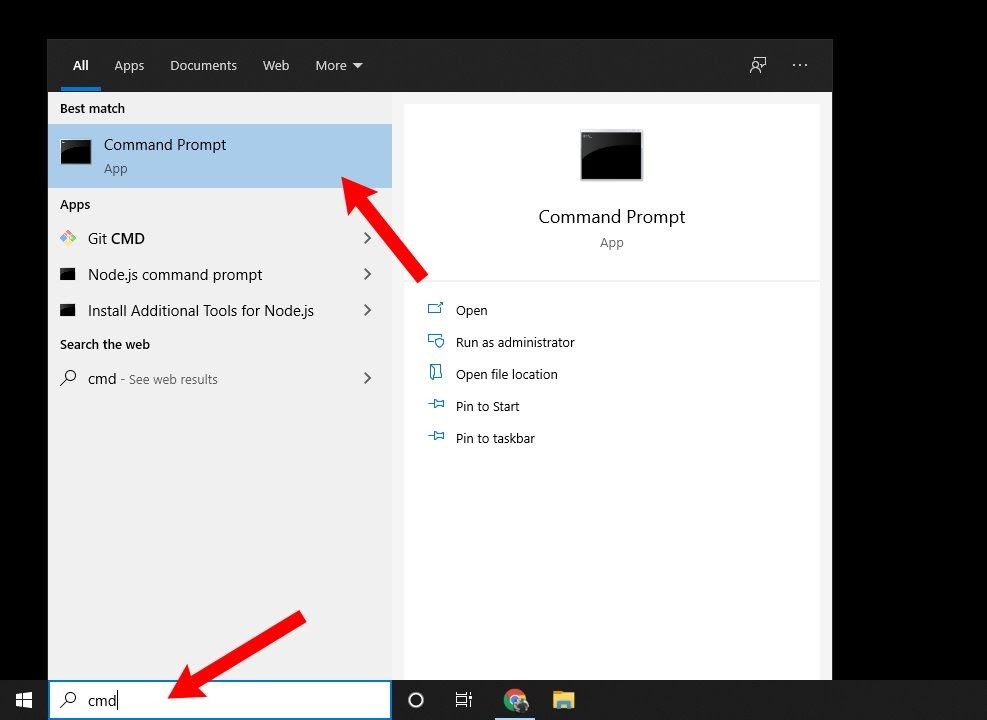

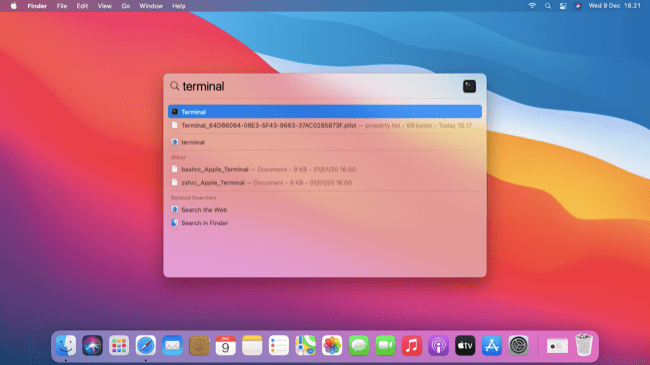

Open the CMD prompt (Windows)

Open the Terminal (Mac)

# Step 0: Create a project folder under your Home folder.

mkdir project

cd project

# Step 1: Create a virtual environment

python -m venv myenv

# Step 2: Activate it

# On Mac/Linux:

source myenv/bin/activate

# On Windows:

myenv\Scripts\activate.bat

# Step 3: Install packages (they go inside `myenv`, not global)

pip install faker

# Step 4: Open Python

python

# Step 5: Verify

import sys

sys.prefix

sys.base_prefix

# Step 6: Run this sample

from faker import Faker

fake = Faker()

fake.name()

# Step 6: Close Python (Control + Z)

# Step 7: Deactivate the venv when done

deactivate

As a next step, you can either use Poetry or UV as your package manager.

#venv #python #uv #poetry developer_tools

[Avg. reading time: 3 minutes]

UV

Dependency & Environment Manager

- Written in Rust.

- Syntax is lightweight.

- Automatic Virtual environment creation.

Create a new project:

# Initialize a new uv project

uv init uv_helloworld

Sample layout of the directory structure

.

├── main.py

├── pyproject.toml

├── README.md

└── uv.lock

# Change directory

cd uv_helloworld

# # Create a virtual environment myproject

# uv venv myproject

# or create a UV project with specific version of Python

# uv venv myproject --python 3.11

# # Activate the Virtual environment

# source myproject/bin/activate

# # Verify the Virtual Python version

# which python3

# add library (best practice)

uv add faker

# verify the list of libraries under virtual env

uv tree

# To find the list of libraries inside Virtual env

uv pip list

edit the main.py

from faker import Faker

fake = Faker()

print(fake.name())

uv run main.py

Read More on the differences between UV and Poetry

[Avg. reading time: 12 minutes]

Python Developer Tools

PEP

PEP, or Python Enhancement Proposal, is the official style guide for Python code. It provides conventions and recommendations for writing readable, consistent, and maintainable Python code.

- PEP 8 : Style guide for Python code (most famous).

- PEP 20 : "The Zen of Python" (guiding principles).

- PEP 484 : Type hints (basis for MyPy).

- PEP 517/518 : Build system interfaces (basis for pyproject.toml, used by Poetry/UV).

- PEP 572 : Assignment expressions (the := walrus operator).

- PEP 695 : Type parameter syntax for generics (Python 3.12).

Key Aspects of PEP 8 (Popular ones)

Indentation

- Use 4 spaces per indentation level

- Continuation lines should align with opening delimiter or be indented by 4 spaces.

Line Length

- Limit lines to a maximum of 79 characters.

- For docstrings and comments, limit lines to 72 characters.

Blank Lines

- Use 2 blank lines before top-level functions and class definitions.

- Use 1 blank line between methods inside a class.

Imports

- Imports should be on separate lines.

- Group imports into three sections: standard library, third-party libraries, and local application imports.

- Use absolute imports whenever possible.

# Correct

import os

import sys

# Wrong

import sys, os

Naming Conventions

- Use

snake_casefor function and variable names. - Use

CamelCasefor class names. - Use

UPPER_SNAKE_CASEfor constants. - Avoid single-character variable names except for counters or indices.

Whitespace

- Don’t pad inside parentheses/brackets/braces.

- Use one space around operators and after commas, but not before commas.

- No extra spaces when aligning assignments.

Comments

- Write comments that are clear, concise, and helpful.

- Use complete sentences and capitalize the first word.

- Use # for inline comments, but avoid them where the code is self-explanatory.

Docstrings

- Use triple quotes (""") for multiline docstrings.

- Describe the purpose, arguments, and return values of functions and methods.

Code Layout

- Keep function definitions and calls readable.

- Avoid writing too many nested blocks.

Consistency

- Consistency within a project outweighs strict adherence.

- If you must diverge, be internally consistent.

Linting

Linting is the process of automatically checking your Python code for:

-

Syntax errors

-

Stylistic issues (PEP 8 violations)

-

Potential bugs or bad practices

-

Keeps your code consistent and readable.

-

Helps catch errors early before runtime.

-

Encourages team-wide coding standards.

# Incorrect

import sys, os

# Correct

import os

import sys

# Bad spacing

x= 5+3

# Good spacing

x = 5 + 3

Ruff : Linter and Code Formatter

Ruff is a fast, modern tool written in Rust that helps keep your Python code:

- Consistent (follows PEP 8)

- Clean (removes unused imports, fixes spacing, etc.)

- Correct (catches potential errors)

Install

poetry add ruff

uv add ruff

Verify

ruff --version

ruff --help

example.py

import os, sys

def greet(name):

print(f"Hello, {name}")

def message(name): print(f"Hi, {name}")

def calc_sum(a, b): return a+b

greet('World')

greet('Ruff')

message('Ruff')

poetry run ruff check example.py

poetry run ruff check example.py --fix

poetry run ruff format example.py --check

poetry run ruff format example.py

OR

uv run ruff check example.py

uv run ruff check example.py --fix

uv run ruff format example.py --check

uv run ruff check example.py

MyPy : Type Checking Tool

mypy is a static type checker for Python. It checks your code against the type hints you provide, ensuring that the types are consistent throughout the codebase.

It primarily focuses on type correctness—verifying that variables, function arguments, return types, and expressions match the expected types.

Install

poetry add mypy

or

uv add mypy

or

pip install mypy

sample.py

x = 1

x = 1.0

x = True

x = "test"

x = b"test"

print(x)

def add(a: int, b: int) -> int:

return a + b

print(add(100, 123))

print(add("hello", "world"))

uv run mypy sample.py

or

poetry run mypy sample.py

or

mypy sample.py

[Avg. reading time: 8 minutes]

Error Handling

Python uses try/except blocks for error handling.

The basic structure is:

try:

# Code that may raise an exception

except ExceptionType:

# Code to handle the exception

finally:

# Code executes all the time

Uses

Improved User Experience: Instead of the program crashing, you can provide a user-friendly error message.

Debugging: Capturing exceptions can help you log errors and understand what went wrong.

Program Continuity: Allows the program to continue running or perform cleanup operations before terminating.

Guaranteed Cleanup: Ensures that certain operations, like closing files or releasing resources, are always performed.

Some key points

-

You can catch specific exception types or use a bare except to catch any exception.

-

Multiple except blocks can be used to handle different exceptions.

-

An else clause can be added to run if no exception occurs.

-

A finally clause will always execute, whether an exception occurred or not.

Without Try/Except

x = 10 / 0

Basic Try/Except

try:

x = 10 / 0

except ZeroDivisionError:

print("Error: Division by zero!")

Generic Exception

try:

file = open("nonexistent_file.txt", "r")

except:

print("An error occurred!")

Find the exact error

try:

file = open("nonexistent_file.txt", "r")

except Exception as e:

print(str(e))

Raise - Else and Finally

try:

x = -10

if x <= 0:

raise ValueError("Number must be positive")

except ValueError as ve:

print(f"Error: {ve}")

else:

print(f"You entered: {x}")

finally:

print("This will always execute")

try:

x = 10

if x <= 0:

raise ValueError("Number must be positive")

except ValueError as ve:

print(f"Error: {ve}")

else:

print(f"You entered: {x}")

finally:

print("This will always execute")

Nested Functions

def divide(a, b):

try:

result = a / b

return result

except ZeroDivisionError:

print("Error in divide(): Cannot divide by zero!")

raise # Re-raise the exception

def calculate_and_print(x, y):

try:

result = divide(x, y)

print(f"The result of {x} divided by {y} is: {result}")

except ZeroDivisionError as e:

print(str(e))

except TypeError as e:

print(str(e))

# Test the nested error handling

print("Example 1: Valid division")

calculate_and_print(10, 2)

print("\nExample 2: Division by zero")

calculate_and_print(10, 0)

print("\nExample 3: Invalid type")

calculate_and_print("10", 2)

[Avg. reading time: 4 minutes]

UnitTest

A unit test verifies the correctness of a small, isolated "unit" of code—typically a single function or method—independent of the rest of the program.

Key Benefits of Unit Testing

Isolates functionality – Tests focus on one unit at a time, making it easier to pinpoint where a bug originates.

Enables early detection – Issues are caught during development, reducing costly fixes later in production.

Prevents regressions – Running existing tests after changes ensures new bugs aren’t introduced.

Supports safe refactoring – With a strong test suite, developers can confidently update or restructure code.

Improves quality – High coverage enforces standards, highlights edge cases, and strengthens overall reliability.

Unit Testing in Python

Every language provides its own frameworks for unit testing. In Python, popular choices include:

unittest – The built-in testing framework in the standard library.

pytest – Widely used, simple syntax, rich plugin ecosystem.

doctest – Tests embedded directly in docstrings.

testify – An alternative framework inspired by unittest, with added features.

pytest is the popular testing tool for data/ML code. It’s faster to write, far more expressive for data-heavy tests, and has a rich plugin ecosystem that plays nicely with Spark, Pandas, MLflow, and CI.

git clone https://github.com/gchandra10/pytest-demo.git

uv run pytest -v

[Avg. reading time: 11 minutes]

DUCK DB

DuckDB is a single file built with no dependencies.

All the great features can be read here https://duckdb.org/

Automatic Parallelism: DuckDB has improved its automatic parallelism capabilities, meaning it can more effectively utilize multiple CPU cores without requiring manual tuning. This results in faster query execution for large datasets.

Parquet File Improvements: DuckDB has improved its handling of Parquet files, both in terms of reading speed and support for more complex data types and compression codecs. This makes DuckDB an even better choice for working with large datasets stored in Parquet format.

Query Caching: Improves the performance of repeated queries by caching the results of previous executions. This can be a game-changer for analytics workloads with similar queries being run multiple times.

How to use DuckDB?

Download the CLI Client

-

Linux).

-

For other programming languages, visit https://duckdb.org/docs/installation/

-

Unzip the file.

-

Open Command / Terminal and run the Executable.

DuckDB in Data Engineering

Download orders.parquet from

https://github.com/duckdb/duckdb-data/releases/download/v1.0/orders.parquet

More files are available here

https://github.com/cwida/duckdb-data/releases/

Open Command Prompt or Terminal

./duckdb

# Create / Open a database

.open ordersdb

Duckdb allows you to read the contents of orders.parquet as is without needing a table. Double quotes around the file name orders.parquet is essential.

describe table "orders.parquet"

Not only this, but it also allows you to query the file as-is. (This feature is similar to one data bricks supports)

select * from "orders.parquet" limit 3;

DuckDB supports CTAS syntax and helps to create tables from the actual file.

show tables;

create table orders as select * from "orders.parquet";

select count(*) from orders;

DuckDB supports parallel query processing, and queries run fast.

This table has 1.5 million rows, and aggregation happens in less than a second.

select now(); select o_orderpriority,count(*) cnt from orders group by o_orderpriority; select now();

DuckDB also helps to convert parquet files to CSV in a snap. It also supports converting CSV to Parquet.

COPY "orders.parquet" to 'orders.csv' (FORMAT "CSV", HEADER 1);Select * from "orders.csv" limit 3;

It also supports exporting existing Tables to Parquet files.

COPY "orders" to 'neworder.parquet' (FORMAT "PARQUET");

DuckDB supports Programming languages such as Python, R, JAVA, node.js, C/C++.

DuckDB ably supports Higher-level SQL programming such as Macros, Sequences, Window Functions.

Get sample data from Yellow Cab

https://www.nyc.gov/site/tlc/about/tlc-trip-record-data.page

Copy yellow cabs data into yellowcabs folder

create table taxi_trips as select * from "yellowcabs/*.parquet";

SELECT

PULocationID,

EXTRACT(HOUR FROM tpep_pickup_datetime) AS hour_of_day,

AVG(fare_amount) AS avg_fare

FROM

taxi_trips

GROUP BY

PULocationID,

hour_of_day;

Extensions

https://duckdb.org/docs/extensions/overview

INSTALL json;

LOAD json;

select * from demo.json;

describe demo.json;

Load directly from HTTP location

select * from 'https://raw.githubusercontent.com/gchandra10/filestorage/main/sales_100.csv'

#duckdb #singlefiledatabase #parquet #tools #cli

[Avg. reading time: 8 minutes]

JQ

- jq is a lightweight and flexible command-line JSON processor.

- Reads JSON from stdin or a file, applies filters, and writes JSON to stdout.

- Useful when working with APIs, logs, or config files in JSON format.

- Handy tool in Automation.

- Download JQ CLI (Preferred) and learn JQ.

- Use the VSCode Extension and learn JQ.

Download the sample JSON

https://raw.githubusercontent.com/gchandra10/jqtutorial/refs/heads/master/sample_nows.json

Note: As this has no root element, '.' is used.

1. View JSON file in readable format

jq '.' sample_nows.json

2. Read the First JSON element / object

jq 'first(.[])' sample_nows.json

3. Read the Last JSON element

jq 'last(.[])' sample_nows.json

4. Read top 3 JSON elements

jq 'limit(3;.[])' sample_nows.json

5. Read 2nd & 3rd element. Remember, Python has the same format. LEFT Side inclusive, RIGHT Side exclusive

jq '.[2:4]' sample_nows.json

6. Extract individual values. | Pipeline the output

jq '.[] | [.balance,.age]' sample_nows.json

7. Extract individual values and do some calculations

jq '.[] | [.age, 65 - .age]' sample_nows.json

8. Return CSV from JSON

jq '.[] | [.company, .phone, .address] | @csv ' sample_nows.json

9. Return Tab Separated Values (TSV) from JSON

jq '.[] | [.company, .phone, .address] | @tsv ' sample_nows.json

10. Return with custom pipeline delimiter ( | )

jq '.[] | [.company, .phone, .address] | join("|")' sample_nows.json

Pro TIP : Export this result > output.txt and Import to db using bulk import tools like bcp, load data infile

11. Convert the number to string and return | delimited result

jq '.[] | [.balance,(.age | tostring)] | join("|") ' sample_nows.json

12. Process Array return Name (returns as list / array)

jq '.[] | [.friends[].name]' sample_nows.json

or (returns line by line)

jq '[].friends[].name' sample_nows.json

13. Parse multi level values

returns as list / array

jq '.[] | [.name.first, .name.last]' sample_nows.json

returns line by line

jq '.[].name.first, .[].name.last' sample_nows.json

14. Query values based on condition, say .index > 2

jq 'map(select(.index > 2))' sample_nows.json

jq 'map(select(.index > 2)) | .[] | [.index,.balance,.age]' sample_nows.json

15. Sorting Elements

# Sort by Age ASC

jq 'sort_by(.age)' sample_nows.json

# Sort by Age DESC

jq 'sort_by(-.age)' sample_nows.json

# Sort on multiple keys

jq 'sort_by(.age, .index)' sample_nows.json

Use Cases

curl -s https://www.githubstatus.com/api/v2/status.json

curl -s https://www.githubstatus.com/api/v2/status.json | jq '.'

curl -s https://www.githubstatus.com/api/v2/status.json | jq '.status'

#jq #tools #json #parser #cli #automation

[Avg. reading time: 5 minutes]

SQLite

Its a Serverless - Embedded database. Database engine is a library compiled into your Application.

-

The entire database is one file on disk.

-

It’s self-contained - needs no external dependencies.

-

It’s the most widely deployed database in the world.

How It’s Different from “Big” Databases

- No client-server architecture - your app directly reads/writes the database file

- No network overhead - everything is local file I/O

- No configuration - no setup, no admin, no user management

- Lightweight - the library is only a few hundred KB

- Single writer at a time - multiple readers OK, but writes are serialized

Key Architectural Concepts

ACID Properties:

- Transactions are atomic, consistent, isolated, durable

- Even if your app crashes mid-write, database stays consistent

Locking & Concurrency:

- Database-level locking (not row or table level like PostgreSQL)

- Write transactions block other writers

- This is fine for mobile/embedded, problematic for high-concurrency servers

Storage & Pages:

- Data stored in fixed-size pages (default 4KB)

- Understanding page size matters for performance tuning

When to Use SQLite

- Mobile apps (iOS, Android)

- Desktop applications

- Embedded systems (IoT devices, cars, planes)

- Small-to-medium websites (< 100K hits/day)

- Local caching

- Application file format (instead of XML/JSON)

- Development/testing

When not to Use SQLite

- High-concurrency web apps with many simultaneous writers

- Distributed systems needing replication

- Client-server architectures where you need central control

- Applications requiring fine-grained access control

Performance Characteristics

- Extremely fast for reads

- Very fast for writes on local storage

- Slower on network drives (NFS, cloud mounts)

- Indexes work like other databases - crucial for query performance

- Analyze your queries - use EXPLAIN QUERY PLAN

Demo

git clone https://github.com/gchandra10/python_sqlite_demo

[Avg. reading time: 5 minutes]

Introduction

MLflow Components

MLflow Tracking

- Logs experiments, parameters, metrics, and artifacts

- Provides UI for comparing runs and visualizing results

- Supports automatic logging for popular ML libraries

Use case: Track model performance across different hyperparameters, compare experiment results

MLflow Projects

- Packages ML code in reusable, reproducible format

- Uses conda.yaml or requirements.txt for dependencies

- Supports different execution environments (local, cloud, Kubernetes)

Use case: Share reproducible ML workflows, standardize project structure

MLflow Models

- Standardizes model packaging and deployment

- Supports multiple ML frameworks (scikit-learn, TensorFlow, PyTorch, etc.)

- Enables model serving via REST API, batch inference, or cloud platforms

Use case: Deploy models consistently across environments, A/B test different model versions

MLflow Model Registry

- Centralized model store with versioning and stage management

- Tracks model lineage and metadata

- Supports approval workflows and access controls

Use case: Manage model lifecycle from staging to production, collaborate on model deployment

Common Use Cases

Experiment Management

- Compare model architectures, hyperparameters, and feature engineering approaches

- Track training metrics over time and across team members

Model Deployment

- Package models for consistent deployment across dev/staging/prod

- Serve models as REST endpoints or batch processing jobs

Collaboration

- Share reproducible experiments and models across data science teams

- Maintain audit trail of model development and deployment decisions

MLOps Workflows

- Automate model training, validation, and deployment pipelines

- Integrate with CI/CD systems for continuous model delivery

MLflow works well as a lightweight, open-source solution that integrates with existing ML workflows without requiring major infrastructure changes.

[Avg. reading time: 4 minutes]

MLflow Experiment Structure

A typical Chemistry experiment we did in school days.

| Experiment (ML Project) | Run # | Inputs (Parameters) | Process (Code/Recipe) | Outputs (Artifacts) | Metrics (Results) |

|---|---|---|---|---|---|

| Acid + Base Reaction | Run 1 | Acid=10ml, Base=5ml | Stirred 2 mins, room temp | Beaker with solution | pH=7.0 |

| Acid + Base Reaction | Run 2 | Acid=10ml, Base=7ml | Stirred 2 mins, room temp | Beaker with solution | pH=6.2 |

| Acid + Base Reaction | Run 3 | Acid=10ml, Base=7ml | Stirred 5 mins, heat | Beaker with solution | pH=6.0, Color=yellow |

- Experiment → Group of related trials (like a project or ML task).

- Run → One trial with a unique ID (just like a single lab experiment entry).

- Inputs (Parameters) → Model hyperparameters (learning rate, batch size, etc.).

- Process (Code/Recipe) → Training code or pipeline steps.

- Outputs (Artifacts) → Models, plots, datasets, or serialized files.

- Metrics (Results) → Accuracy, loss, F1-score, etc.

MLflow

│

├── Experiment A

│ ├── Run 1

│ │ ├── Parameters

│ │ ├── Metrics

│ │ ├── Artifacts

│ │ └── Tags

│ ├── Run 2

│ │ ├── Parameters

│ │ ├── Metrics

│ │ ├── Artifacts

│ │ └── Tags

│ └── Run 3

│ ├── Parameters

│ ├── Metrics

│ ├── Artifacts

│ └── Tags

│

└── Experiment B

├── Run 1

├── Run 2

└── Run N

git clone https://github.com/gchandra10/uni_multi_model.git

[Avg. reading time: 8 minutes]

Why MLflow

MLflow provides comprehensive support for traditional ML workflows, making it effortless to track experiments, manage models, and deploy solutions at scale.

Key Features

Intelligent (Auto)logging

- Simple Integration for scikit-learn, XGBoost, and more

- Automatic Parameter Capture (logs all model hyperparameters without manual intervention)

- Built-in Evaluation Metrics (automatically computes and stores relevant performance metrics)

- Model Serialization (handles complex objects like pipelines seamlessly)

Compare Model Performance Across Algorithms

-

Save Time: No more manually tracking results in spreadsheets or notebooks

-

Make Better Decisions: Easily spot which algorithms perform best on your data

-

Avoid Mistakes: Never lose track of promising model configurations

-

Share Results: Team members can see all experiments and build on each other’s work

-

Visual charts comparing accuracy, precision, recall across all your models

-

Sortable tables showing parameter combinations and their results

-

Quick filtering to find models that meet specific performance criteria

-

Export capabilities to share findings with stakeholders

Flexible Deployment

- Real-Time Inference for low-latency prediction services

- Batch Processing for large-scale scoring jobs

- Edge Deployment for offline and mobile applications

- Containerized Serving with Docker and Kubernetes support

- Cloud Integration across AWS, Azure, and Google Cloud platforms

- Custom Serving Logic for complex preprocessing and postprocessing requirements

Capabilities

Tracking Server & MLflow UI

Start a new project

VSCode, Open Workspace

Open Shell 1 (Terminal/GitBash)

uv init mlflow_demo

cd mlflow_demo

uv add mlflow pandas numpy scikit-learn matplotlib

Option 1: Store MLflow details in Local Machine

mlflow server --host 127.0.0.1 --port 8080

Open this URL and copy the file to your VSCode

https://github.com/gchandra10/uni_multi_model/blob/main/01-lr-model.py

Open Shell 2

Step Activate Virtual Environment

python 01-lr-model.py

Open your browser and goto http://127.0.0.1:8080

View the Experiment

Option 2: Store MLflow details in a Local Database

mlflow server --host 127.0.0.1 --port 8080 \

--backend-store-uri sqlite:///mlflow.db

Option 3: Store MLflow details in a Remote Database

export AWS_PROFILE=your_profile_name

mlflow server --host 127.0.0.1 --port 8080 \

--default-artifact-root s3://yourbucket

--backend-store-uri 'postgresql://yourhostdetails/'

Model Serving

Open Shell 3

Optional Step Activate Virtual Environment

export MLFLOW_TRACKING_URI=http://127.0.0.1:8080

mlflow models serve \

-m "models:/Linear_Regression_Model/1" \

--host 127.0.0.1 \

--port 5001 \

--env-manager local

Real Time Prediction

Open Shell 4

Optional Step Activate Virtual Environment

curl -X POST "http://127.0.0.1:5001/invocations" \

-H "Content-Type: application/json" \

--data '{"inputs": [{"ENGINESIZE": 2.0}, {"ENGINESIZE": 3.0}, {"ENGINESIZE": 4.0}]}'

OR

curl -X POST http://127.0.0.1:5001/invocations \

-H "Content-Type: application/json" \

-d '{

"dataframe_split": {

"columns": ["ENGINESIZE"],

"data": [[2.0],[3.0],[4.0]]

}

}'

#mlflow #serving #mlflow_server

[Avg. reading time: 5 minutes]

YAML

Introduction

- YAML Ain’t Markup Language.

- Human-readable alternative to JSON.

- Indentation is very key. (like Python)

- Used for configuration, not for programming logic.

Key Principles

- Whitespace indentation -> hierarchy

- Colon (:) -> Key Value Pair

- Dash (-) -> List Item

- Comments (#)

Use Cases in MLOps

- MLflow experiment configs (parameters, environments)

- Kubernetes -> Pods, Services, Deployments

- Docker Compose -> multi-container setups

- CI/CD pipelines -> GitHub Actions, GitLab CI, Azure DevOps

{

"experiment": "CO2_Regression",

"params": {

"alpha": 0.1,

"max_iter": 100

},

"tags": ["linear_regression", "mlflow"]

}

experiment: CO2_Regression

params:

alpha: 0.1

max_iter: 100

tags:

- linear_regression

- mlflow

YAMLLint OR VSCode YAML Validator Extension

YAML Data Structures

Scalars (strings, numbers, booleans)

learning_rate: 0.01

early_stopping: true

experiment_name: "CO2_Prediction"

Lists

models:

- linear_regression

- random_forest

- xgboost

Dictionaries (maps)

params:

n_estimators: 100

max_depth: 5

Description

description: |

This is a multi-line string.

It preserves line breaks.

Useful for comments/description/notes.